Scammers continue to exploit social media platforms. Meta has yet to fulfill all the recommendations made last year by experts from the CERT Polska team at NASK, which were intended to enhance the safety of Polish social media users.

On December 5, 2024, we published CERT Polska's expectations of Meta regarding the problem of fraud on its social media platforms. These expectations were also conveyed to Meta representatives and served as the basis for further discussions on improving the security of the platform's advertising mechanisms.

"NASK stands guard over the security of Polish internet users. We need cooperation from the owners of internet platforms like Meta to achieve this. We expect that the solutions we have presented will be implemented without delay," emphasizes Radosław Nielek, director of the NASK institute.

After the December meeting, we committed to monitoring whether the expectations of CERT Polska experts were being met. Three months after the meeting, the actions taken by Meta have not resolved the issues we observed, which were detailed in the publication Ad fraud on large online platforms.

Meta has already stated that it will not implement the request to automatically block content related to domains included on the CERT Polska Warning List. There are also no planned changes to how content is indexed in the Ad Library. The changes in handling content reports from regular accounts are also not considered satisfactory. As for the request to expand the team of Polish-speaking moderators, there has been no response indicating whether any action has been taken. Meta has also not addressed the issue of proactively blocking accounts and pages that publish harmful ads.

Detecting harmful content, including in Polish

In relation to this point, we anticipated that Meta would present a plan for implementing an effective method to detect content in Polish. Instead, we were provided with proposals for solutions that are currently being rolled out globally and may also be applicable to Polish users.

Among the proposed solutions, a key idea was the use of facial recognition technology to enable Meta’s systems to automatically identify the use of celebrity images in fraudulent advertisements. This technology can also assist users in recovering access to compromised accounts. More information about this technology can be found on Meta's website: https://www.meta.com/help/policies/1265316287987507/.

Although there were initial concerns that data protection and biometric data regulations might prevent Meta from implementing this solution in Poland, on March 4, 2025, the company announced that it had successfully introduced the solution within the European Union as well (https://about.fb.com/news/2024/10/testing-combat-scams-restore-compromised-accounts/). Public figures will receive notifications in the coming days with the option to provide their data to protect against the use of their image in content generated by scammers.

At the same time, Meta announced its intention to encourage businesses to make greater use of the existing "Brand Rights Protection" program, which enables the platform to automatically detect unauthorized use of registered logos and trademarks: https://www.facebook.com/business/help/828925381043253.

In our opinion, implementing mechanisms focused on recognizing the use of images of trusted individuals and brands is a step in the right direction, but the proposed solution may prove insufficient. One possible scenario is the use of faces of individuals who are not registered in the system. Criminals actively monitor platform responses and can easily notice that impersonating certain individuals and brands encounters less effective moderation than in other cases.

We should particularly keep in mind that in the mechanisms designed by Meta, registration occurs at the initiative of the given celebrity or company. Meta points to, among other things, legal limitations related to the processing of biometric data, which require obtaining individual consent. This makes it considerably harder to gather a database of images and logos extensive enough to effectively reduce the scale of fraud.

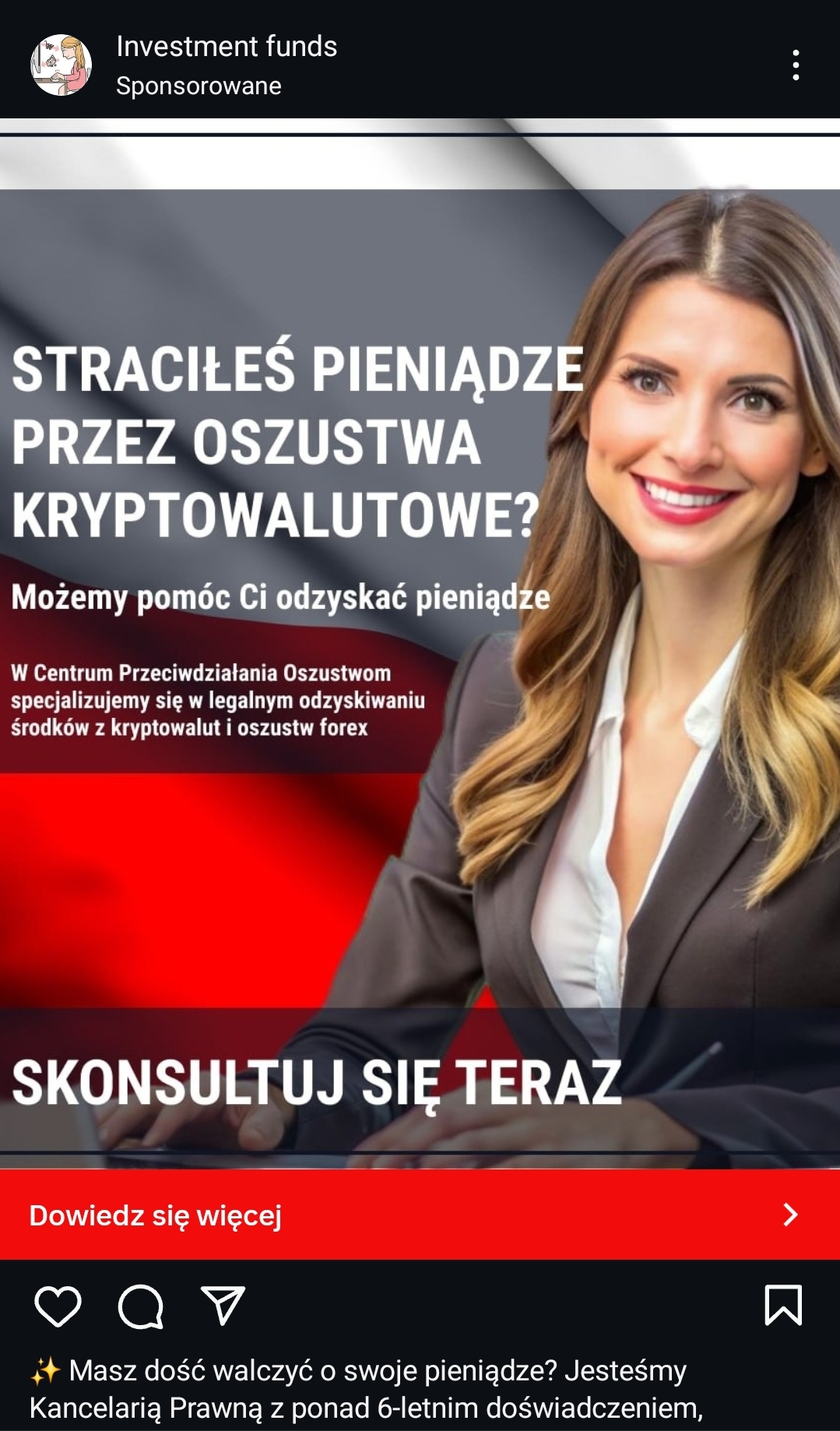

Even if these limitations were addressed and consent obtained on a larger scale, scammers could still shift tactics. They might simply avoid using protected images and instead rely on other forms of deceptive content. For example, many scams currently in circulation promote fake investment platforms by naming them directly (e.g., "Immediate Edge", "Immediate Pro Capex") and luring users with promises of large profits. Similarly, there is a noticeable presence of ads for fake legal services, allegedly offering to recover lost funds, which often use fictional or generic names like “Fraud Prevention Center.”

Platform moderation and hiring additional Polish-speaking moderators

As part of the ongoing discussions with Meta, we were provided with an escalation form — a tool also utilized by other NASK departments, such as the Disinformation Analysis Center. This form enables us to quickly alert Meta moderators to emerging threats. Reports submitted through this channel are prioritized for review. According to Meta representatives, these submissions serve as important signals that contribute to their internal analyses.

This reporting method requires specialists from our CERT Polska team to accurately identify the content, which involves obtaining the correct link or identifier for the ad or post on Facebook. When we receive only a screenshot or a link to the landing page, we rely on tools like the Ad Library to retrieve the necessary identifier. Meanwhile, regular users can report such content directly on the platform in a much more straightforward and user-friendly manner.

During the discussions, we tried to convince Meta of the need to respond better to reports from regular platform users and to pay more attention to moderation. Tools like the escalation form proposed to us are useful for alerting Meta moderators to new threats, but they cannot effectively combat fraud at platform scale. Drawing on our experience as an incident response team, we emphasized the immense value we see in identifying threats based on user reports, which are the main driving force behind initiatives like the Warning List.

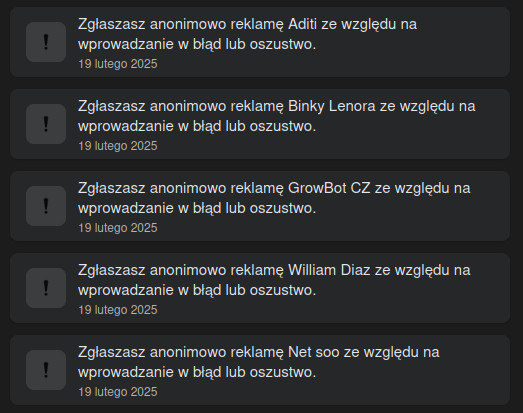

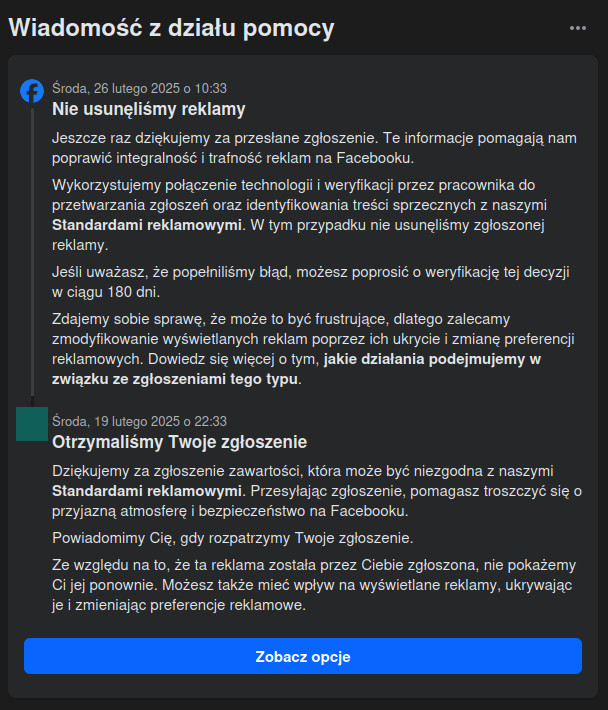

At the same time, we continuously verified whether the response to reports from regular accounts was improving. Unfortunately, in most cases, reports are closed after 7 days with the status "We did not remove the ad".

Among our expectations, we also included the expansion of the team of Polish-speaking moderators. However, we did not receive specific information on this matter.

Blocking accounts that publish harmful content

We also addressed the issue of proactively blocking accounts and pages that publish fraudulent ads during our discussions with Meta. According to the company, they employ a "strike" system — where initially, only the reported content is removed, and the user receives a warning. Account-level restrictions, such as blocking the ability to run ads, are only applied after repeated violations. Meta also stated that in exceptional cases, it carries out proactive measures that can lead to the mass removal of certain types of content.

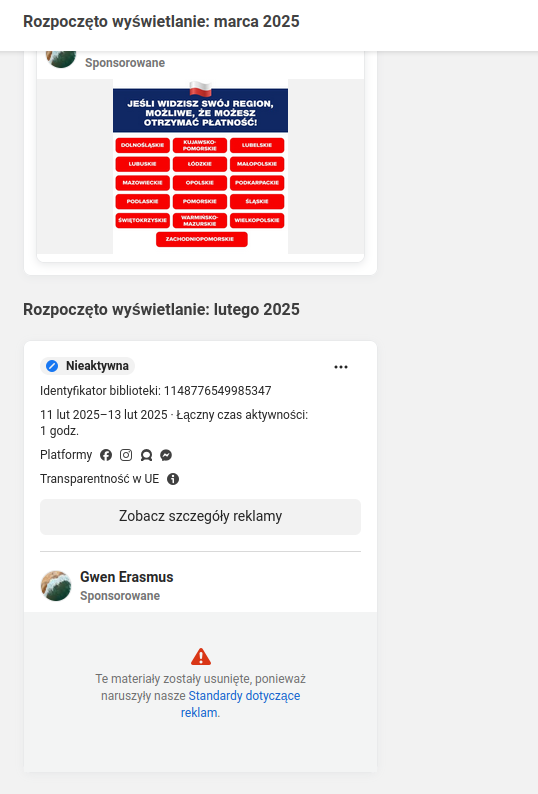

For instance, the page Gwen Erasmus ran ads in February 2025. During moderation, only the ads were taken down, and no restrictions were placed on the page itself. It wasn’t until March 2025, after the fraudulent ads were reposted, that the page was finally removed. The status of such profiles and their content can be monitored through the Ad Library. These types of pages typically have a short lifespan and are used exclusively for advertising, without publishing any other types of posts.

The use of the so-called "strike" system is understandable for typical user accounts. However, Meta has also adopted this principle for fake pages that, like the one discussed above, are created with the intent to deceive.

In our view, applying the same moderation rules across the board significantly slows down the platform’s response and hinders efforts to effectively limit the reach of fraudulent ads. Unfortunately, Meta has yet to address these concerns.

At the same time, although Meta moderators blocked the Gwen Erasmus page, reports about related ads submitted from a regular user account were not resolved. This suggests that negative report statuses may also stem from technical problems and errors in informing users about the platform's actual response.

Implementation of the Warning List

Meta refused to directly implement the Warning List. During the discussions, Meta carried out an analysis and sent CERT Polska numerous questions about the processes for maintaining the List, which we answered in detail. Unfortunately, we were ultimately told that an automatic blocking mechanism based on the domains on the Warning List is not planned.

Instead, Meta announced the creation of a joint, global list managed by an external entity, which will be used as a signal to assist Meta moderators. We received only a non-binding declaration that our List may be considered as one of the sources. We asked Meta to pay more attention to local partners that specialize in threat detection in a given region, but this expectation was not addressed.

It’s also important to note that Meta has not provided any timeline for the potential implementation of such a mechanism.
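To illustrate what automatic blocking based on a domain list could look like in principle, here is a minimal sketch in Python. It assumes the list is available as a plain set of domain names and that an ad's landing-page URL is known; the function names and the example domain are hypothetical, and the sketch does not describe Meta's systems or the actual format of the Warning List.

```python
from urllib.parse import urlsplit


def normalize_host(url: str) -> str:
    """Return the lowercase hostname of a URL, tolerating a missing scheme."""
    if "://" not in url:
        # urlsplit only recognizes the host part when it follows "//".
        url = "//" + url
    host = urlsplit(url).hostname or ""
    return host.rstrip(".").lower()


def is_blocked(url: str, blocked_domains: set[str]) -> bool:
    """Check the hostname and each of its parent domains against the list.

    A listed domain also covers its subdomains, but not unrelated
    domains that merely end with the same characters.
    """
    labels = normalize_host(url).split(".")
    for i in range(len(labels)):
        if ".".join(labels[i:]) in blocked_domains:
            return True
    return False


# Hypothetical list content, for illustration only.
warning_list = {"fake-invest.example"}

print(is_blocked("https://login.fake-invest.example/promo", warning_list))
print(is_blocked("https://notfake-invest.example/", warning_list))
```

Matching on whole domain labels rather than on raw substrings is a deliberate choice in this sketch: it catches subdomains of a listed domain without flagging unrelated sites whose names happen to contain the same text.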

Organizing the Ad Library

In our discussions, we also shared our observations and concerns about the functioning of the Ad Library. A key issue for us was the considerable delay (sometimes up to 24 hours) between when ads are displayed and when they appear in the index. This lag makes it much more difficult to identify ads linked to user reports, particularly when dealing with newly launched campaigns. Access to tools like the Ad Library is crucial for gathering comprehensive information about the threats reported to us.

In response to our questions, Meta stated that the Ad Library was not created with real-time monitoring of dangerous ads in mind, so its limitations stem from its design assumptions. Meta is aware that a given version of an ad may be indexed in the Ad Library only after many hours, and this time is counted from the moment of the first recorded interaction by the user. At the same time, Meta did not announce any changes to this mechanism, claiming that the problem results from technical limitations.

Summary

Three months ago, we communicated our expectations to Meta regarding improving safety on the Facebook platform. Our goal from the very beginning was to increase the safety of Polish internet users, which is why we continuously monitor Meta's actions, believing that pressure makes sense. We observe a partial change — Meta declares a fight against fraudsters by introducing new security systems, such as mechanisms using artificial intelligence. Despite the implemented solutions, the problem of ad fraud, which we have been signaling from the beginning, is still present.

Despite the declarations and changes noted above, many key expectations of CERT Polska have not been met. Fraudulent content continues to appear in ads on a massive scale, and user reports are still closed with a status suggesting no response. Meta has presented no plans to improve update times in the Ad Library, stating that the delay is caused by the design of the platform and its technical limitations. The company also refused to implement the Warning List, instead announcing the creation of a global list managed by an external entity, without providing a clear answer on when such a list will be established.

Meta's efforts to improve security deserve recognition, but on a comprehensive evaluation we find that they are only temporary measures. Systemic solutions are still lacking: more decisive action on moderation and closer cooperation with law enforcement and local security organizations are needed. We hope for improvement in this area.