Fraudsters on social media lure users with fake ads promising easy money through schemes supposedly backed by celebrities, or insider access to government investment programs. These ads lead to harmful websites designed to deceive and exploit users. Because emotions grab attention, some of this content is deliberately shocking: altered images of celebrities shown naked, beaten, in hospital, or after accidents, and videos posing as reports from news channels or well-known media websites. All of it is designed to shock, worry, or intrigue users and lead them down the path prepared by cybercriminals.

That's why CERT Polska continuously monitors and responds to the actions of fraudsters. The identification process is greatly supported by incident reports, which anyone can submit to CERT Polska via the form at https://incydent.cert.pl/. As a national CSIRT, we operate the Warning List of dangerous websites, which ISPs use to protect users at the DNS level. In 2023 alone, CERT Polska used it to block 80,000 harmful domains, including 32,000 related to fake investments. Every day, over 250 fraudulent sites are added to the list, and access to listed sites is blocked millions of times.

It is important to stress that the only entities capable of effectively limiting the reach of fraudsters are the advertising platforms owned by tech giants such as Meta (Facebook, Instagram) and Google.

However, even though reporting mechanisms for harmful ads exist, in practice platforms process reports with significant delays or reject them, especially when the report comes from a regular user. As a result, the responsibility for filtering content is shifted to external entities, such as telecommunication service providers and CSIRTs like CERT Polska and CSIRT KNF (which also helps identify and report this kind of content, especially in the financial sector).

During our observations, we noticed that the problem extends beyond the ads themselves. In particular, the accounts publishing malicious ads are rarely blocked, so fraudsters can keep using them without interruption. This reflects a broader problem with how platforms respond to fraudulent content.

In this article, we explain the nature of the problem in more detail and discuss actions that major platforms could take to better protect their users from these threats.

Since we are a Polish CERT and we describe campaigns targeting Polish users, screenshots are almost exclusively in Polish. We provide a rough translation of deceptive content where possible.

Ad-fraud and fake investments

Large online platforms, like search engines and social media services, are increasingly being used as content aggregators and knowledge sources. These platforms employ various mechanisms (so-called "algorithms") that profile users and match the displayed content to their interests.

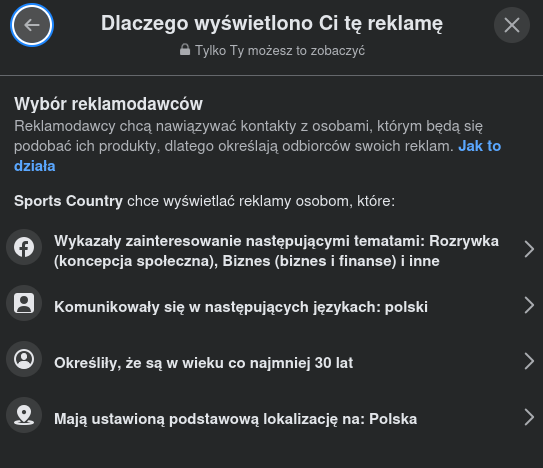

At the same time, content creators want their information to reach as broad an audience as possible. Platforms therefore offer advertising services, such as "sponsored posts", which let content reach a wider audience for a fee. The advertising tools offered to creators provide many options for targeting ads based on the age, gender, origin, or interests of the intended audience.

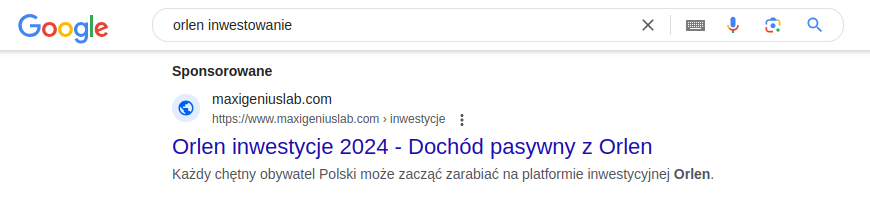

Similarly, search engines have a "sponsored links" mechanism, which positions links as the first results when searching for relevant keywords specified by the advertiser.

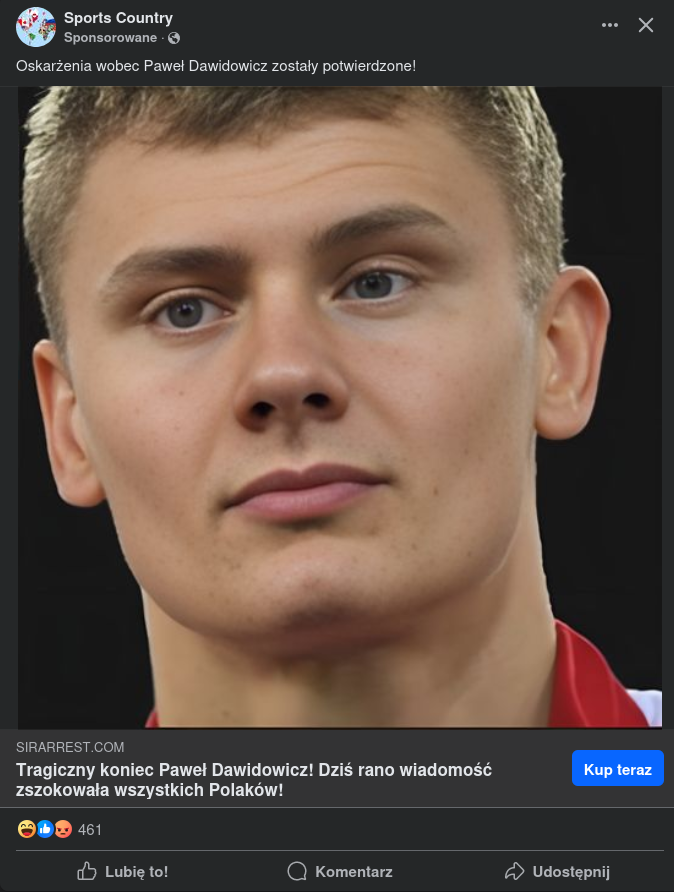

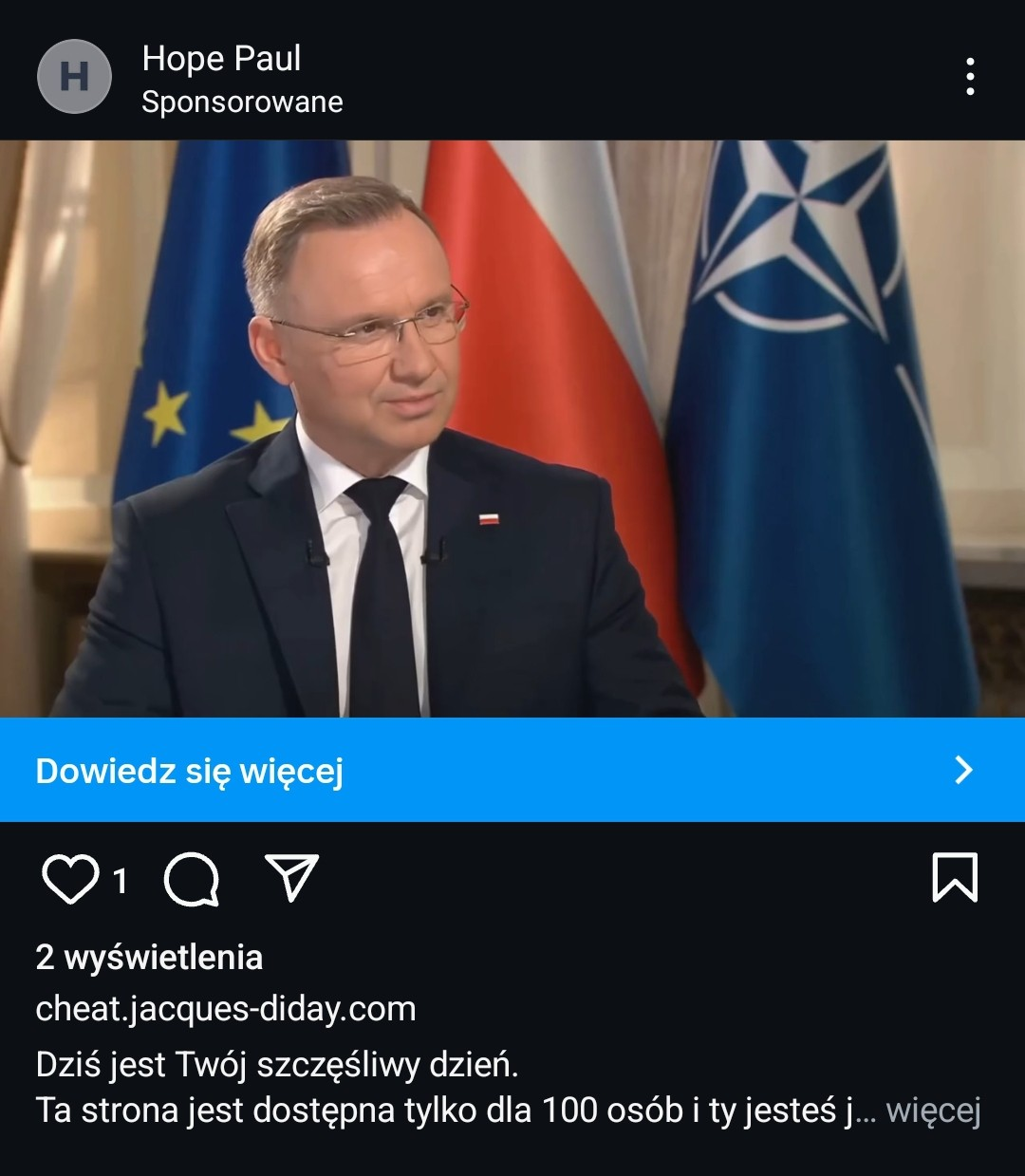

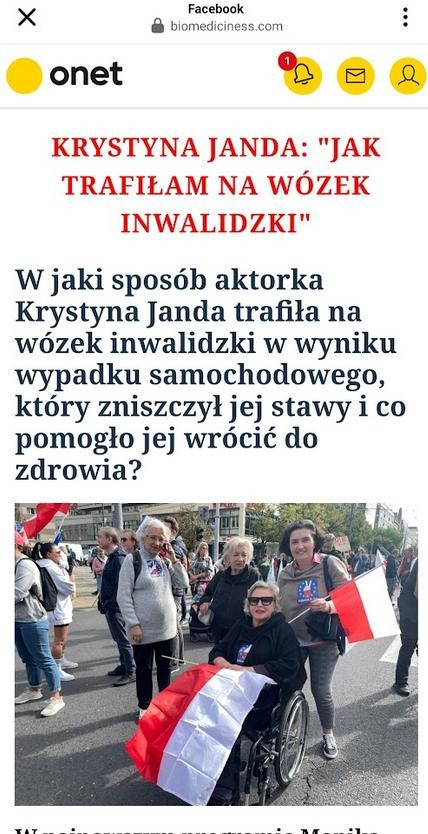

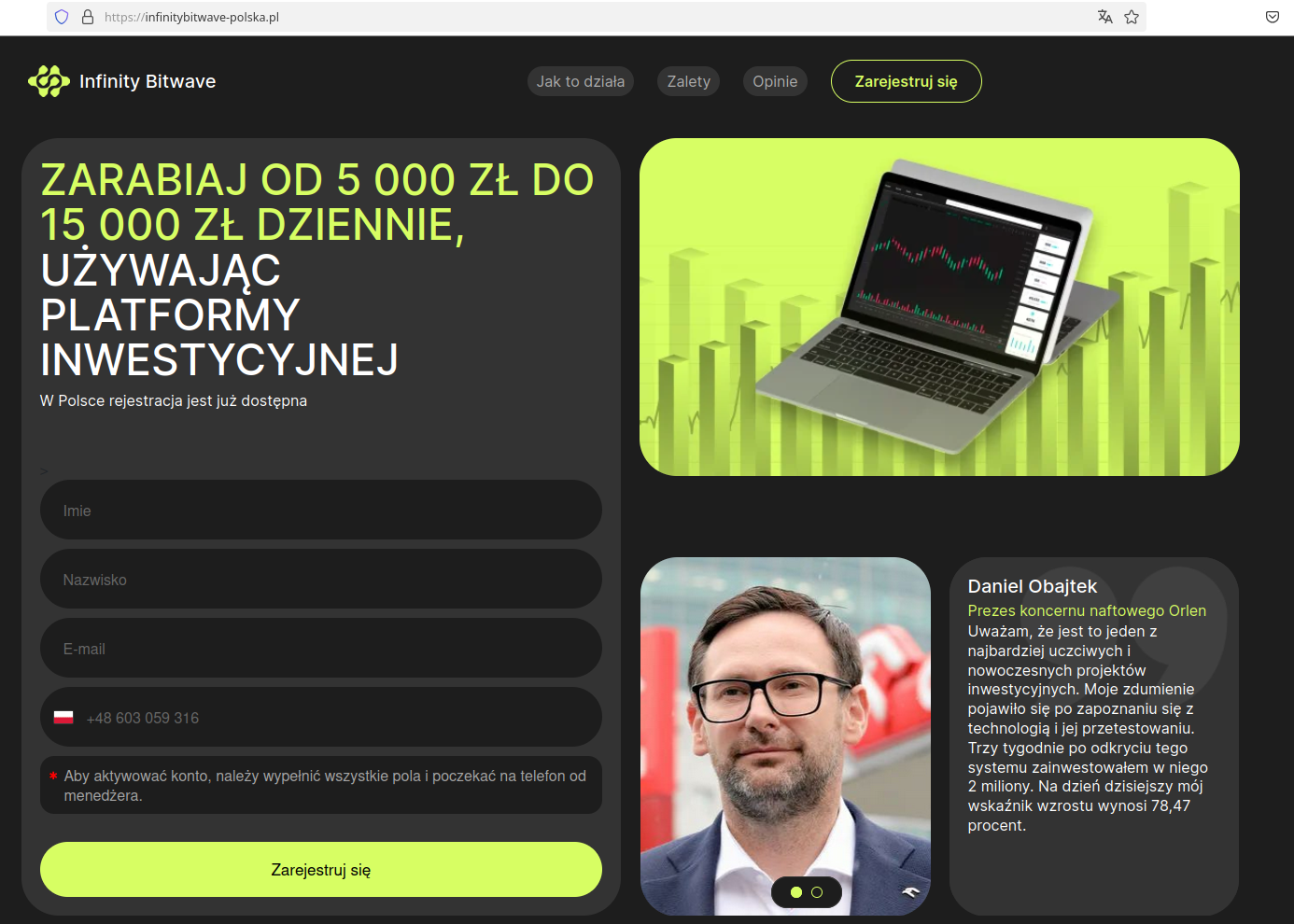

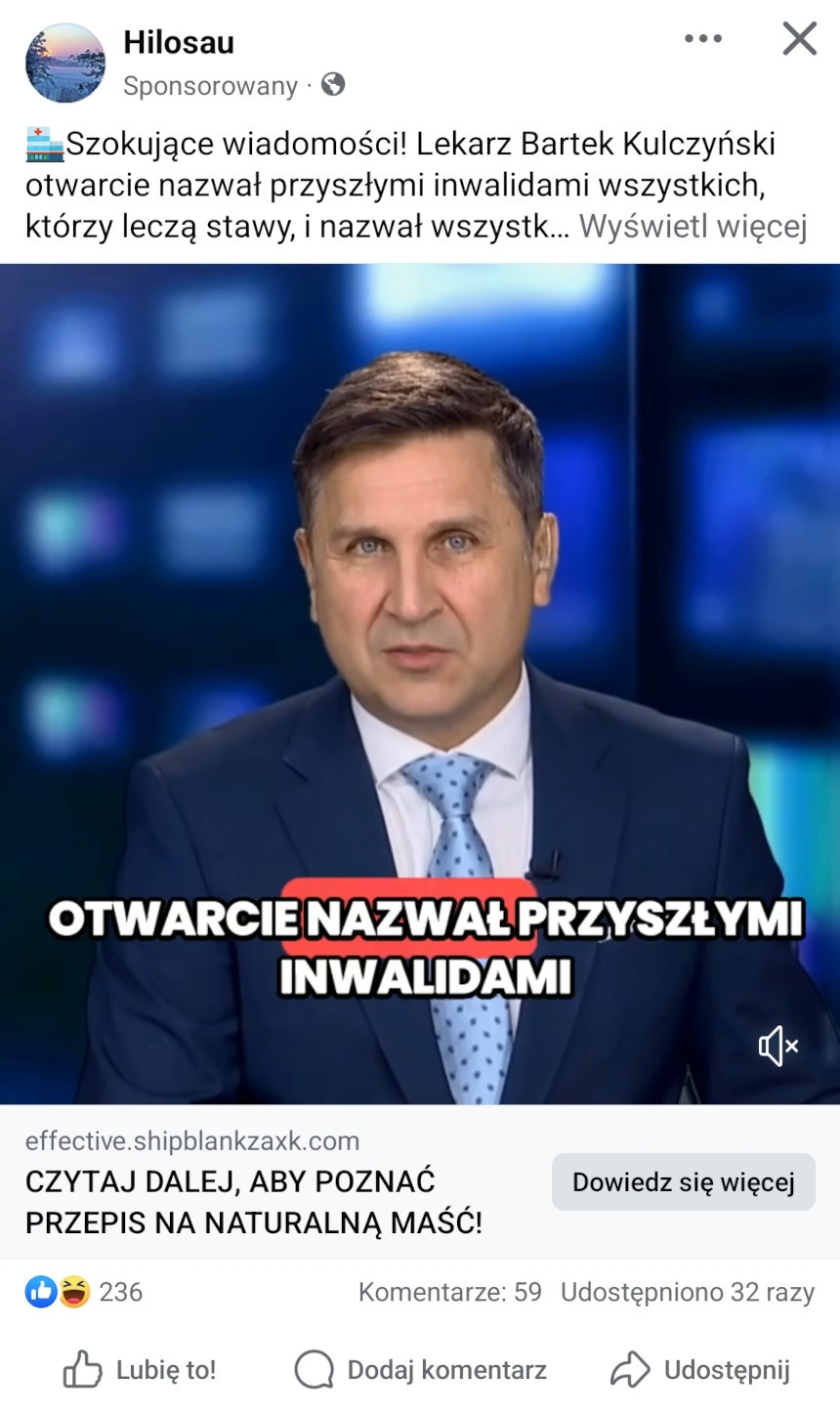

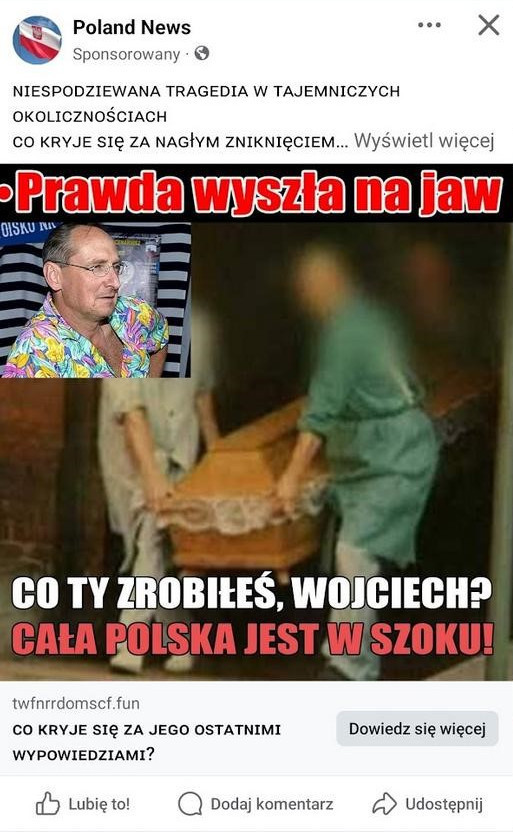

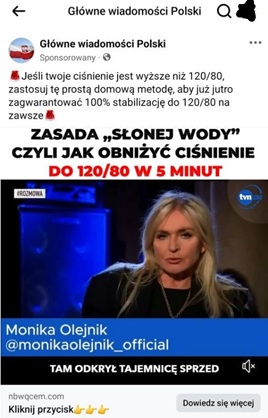

Fraudsters actively use advertising mechanisms to distribute links to fraudulent websites widely. One scheme that is very common in Poland involves fake investments. Posts try to entice users to click with sensational, eye-catching headlines and shocking fake news, often impersonating well-known news portals. To attract more interest, fraudsters use the likenesses of famous public figures and authorities such as doctors, journalists, or entrepreneurs.

After clicking the link, the user is directed to a page with information about a supposed investment and a contact form. Once the form is submitted, a "consultant" calls the provided phone number and encourages the user to sign a contract, register an account on a fake platform, and transfer funds.

In the later stages of the scam, users are encouraged to install software that allows remote access to their phone and computer. Descriptions of the most common scam schemes can be found, among other places, on the website of KNF (the Polish Financial Supervision Authority). Victims suffer financial losses that sometimes exceed one million złoty (roughly 250,000 euro). In addition to losing their savings, victims often have to repay loans and credit taken out in their name by the criminals, and deal with their stolen identity being used to deceive other people.

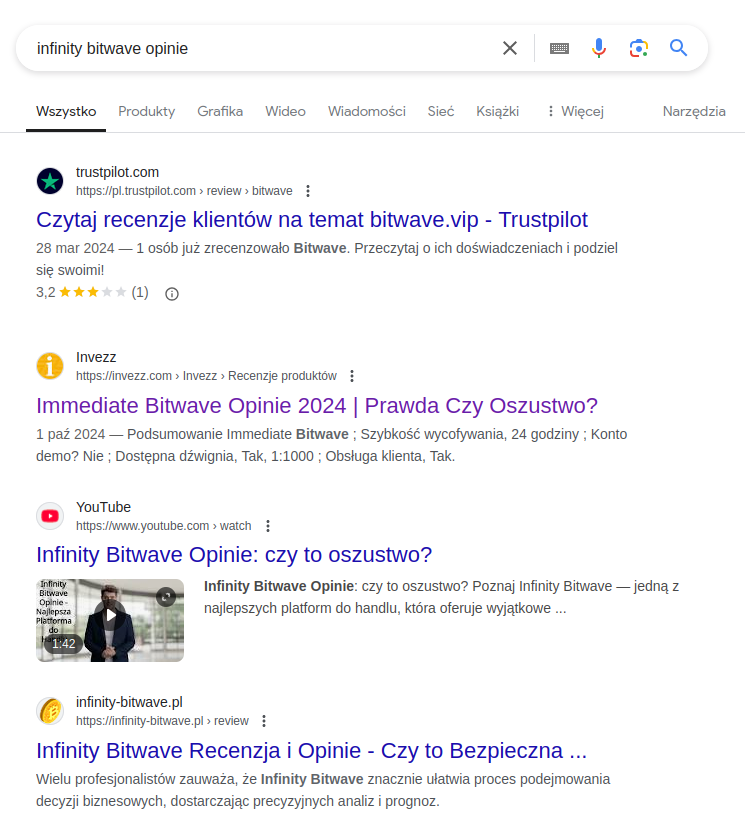

Cautious users sometimes try to verify whether an investment platform is legitimate, for example by searching for reviews on Google. Usually, they then come across highly ranked links to fake reviews intended to make the platform look legitimate.

Many "reviews" can also be found on YouTube, for example deepfakes using the voices and faces of well-known Polish entrepreneurs and influencers.

The large number of ads and content that legitimize the scam makes it possible for criminals to successfully convince some users to participate in a fake investment.

CERT Polska has been warning about this issue since at least 2020, when we wrote about fake electronic banking websites appearing in sponsored links in Google search. In 2022, CSIRT KNF warned about sponsored posts on Facebook using the images of Polish football players to promote fake investments. In 2023, we warned about links in Google leading to fake investment platforms.

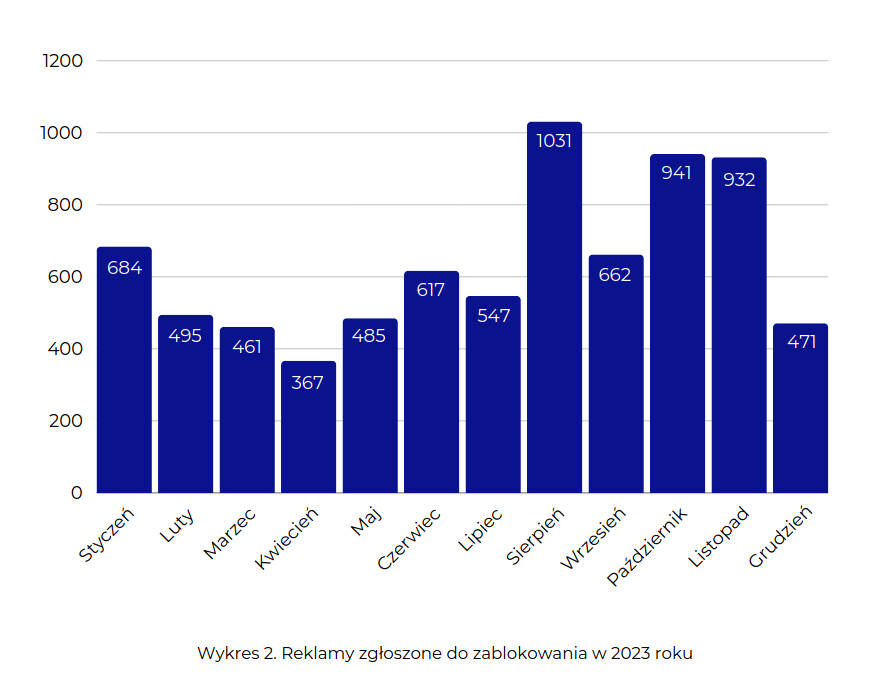

Each year, an increasing number of such incidents can be observed. In 2023, CSIRT KNF reported over 7,500 ads to platforms. The CSIRT KNF annual report for 2023 contains detailed statistics broken down by month, as well as the images used in fraudulent ads.

In 2023, CSIRT NASK used the Warning List to block over 32,000 domains related to fake investments.

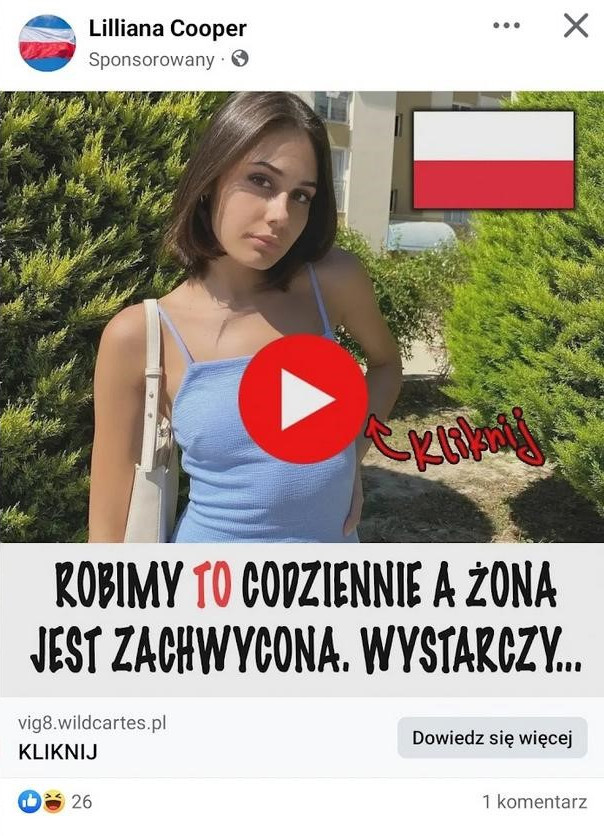

Other Campaigns Using Ads and Social Media Posts

The problem of fraudulent ads is not limited to fake investments. CERT Polska also observes phishing campaigns aimed at tricking users into providing login details or transferring money, as well as campaigns selling miracle "medications" and dietary supplements. These ads use the same techniques as investment scams to attract attention and bypass content moderation.

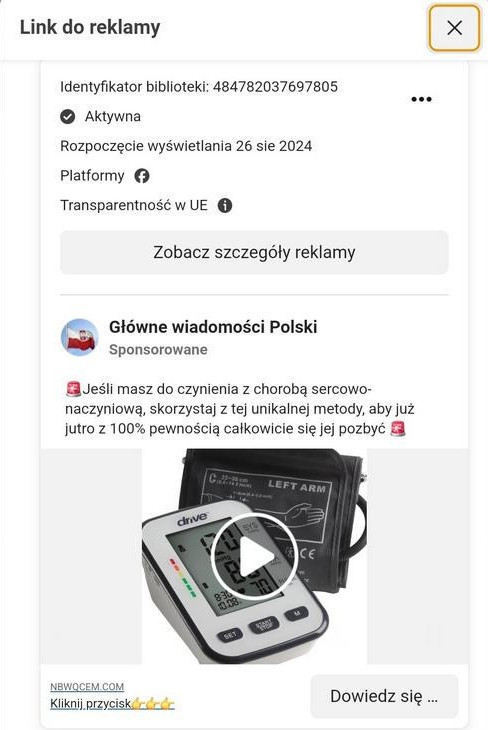

Ads for "Miracle Medications" and Dietary Supplements

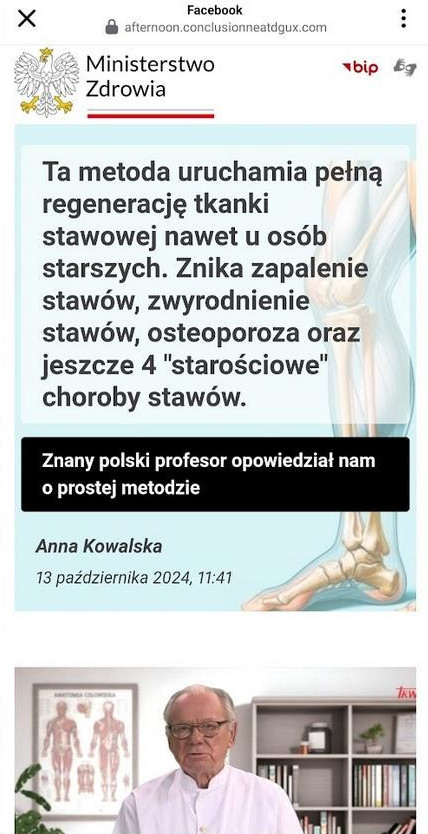

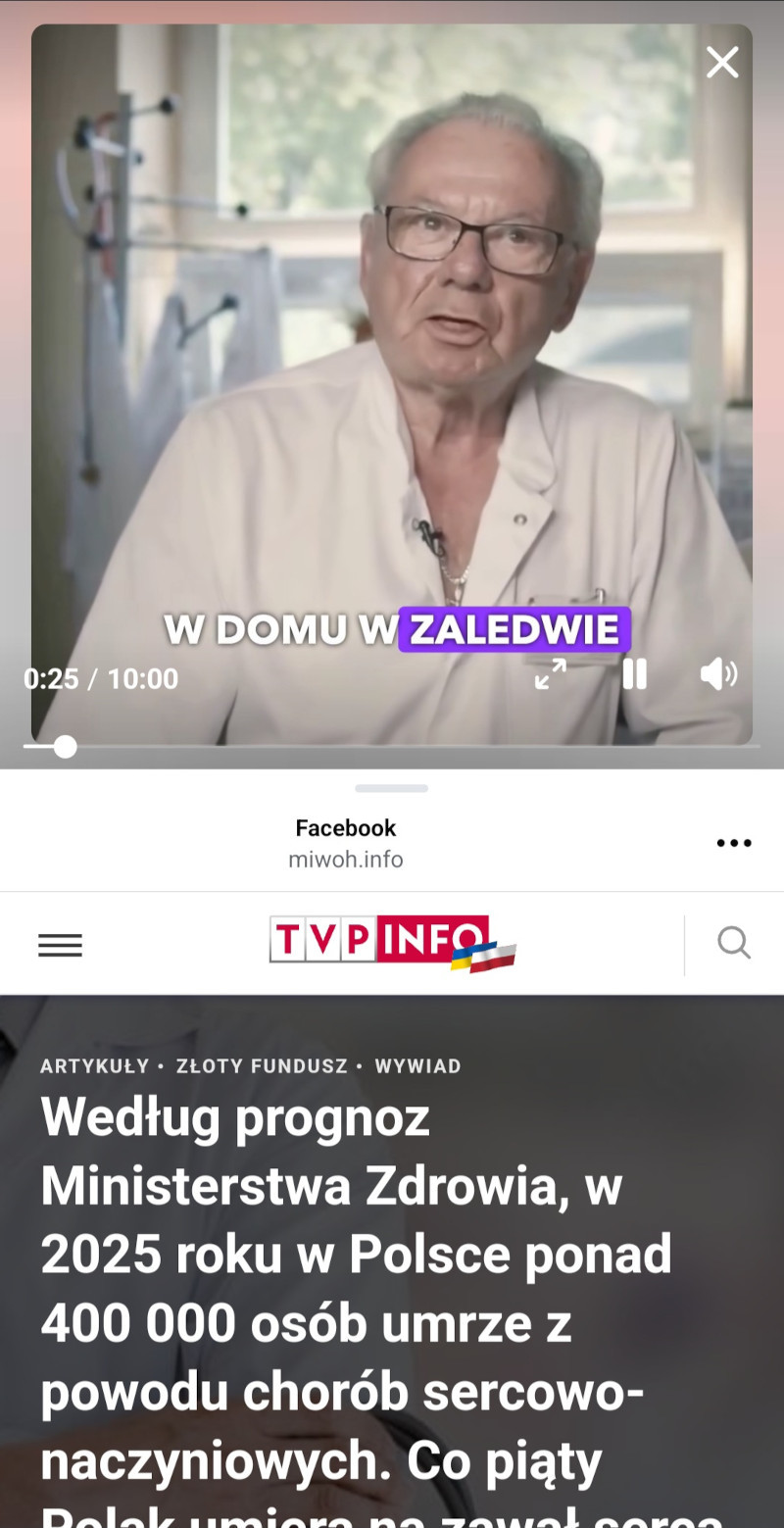

In 2024, we noticed the emergence of ads for medical products that use techniques similar to those seen in investment scams. These ads most often featured images of doctors, presented as medical authorities endorsing the effectiveness of a particular drug, or of journalists allegedly reporting on the topic in a news program.

The websites these ads led to most often imitated popular news sites such as TVP Info or impersonated official government websites, like that of the Ministry of Health.

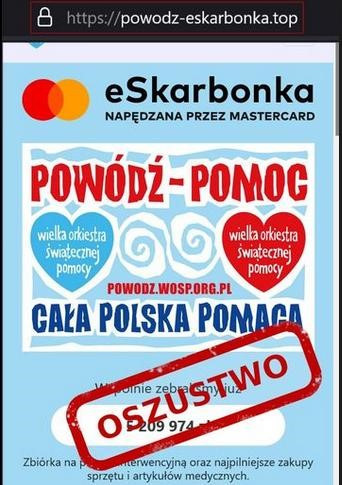

Fake charity campaigns for the flood victims

For fraudsters, no topic is taboo, and every tragedy is an opportunity for profit. The recent flood also became a theme of these campaigns. The disaster caused significant damage in areas such as the Lower Silesian Voivodeship, leading to the launch of many fundraising and aid campaigns. One campaign used sponsored posts to promote a fake fundraiser supposedly organized by WOŚP (the Great Orchestra of Christmas Charity, a well-known Polish charity organisation).

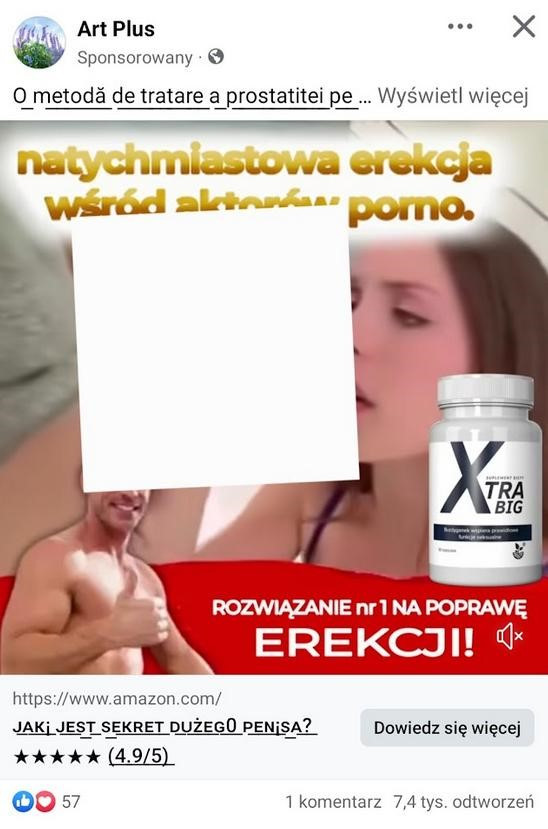

Ads using erotic and pornographic materials

We have also observed pornographic material being used in ads promoting products for erectile dysfunction. These ads employed the same "cloaking" techniques as ads promoting fake investments. Even though the ads openly featured pornographic content, Meta often did not block them for several weeks.

Exploitation of celebrity images in ads on Meta platforms

While researching this phenomenon, we observed the images of more than 139 public figures being used, including politicians, journalists, athletes, doctors, and influencers. The ads featured fabricated graphics or videos spreading false information about a supposed death, compromising situation, or uncovered conspiracy to encourage users to visit the website. This causes reputational damage to the individuals whose images were used without permission, and it is also extremely harmful to society. The trust that followers place in their idols was exploited to build credibility and, ultimately, to steal from unsuspecting users, who then blamed the people whose faces the fraudsters had used for their financial losses.

Cloaking - how criminals bypass automatic filters

Platforms try to verify content, but fraudsters use a method called "cloaking": a set of techniques that show verification mechanisms a different page or ad than the one shown to users.

We have observed that fraudsters use the following methods, among others:

- User-Agent header, which identifies the browser and operating system used to visit the site

- Referer header, which identifies where the user came from before reaching the advertised page (for example, directly or by clicking a link on Facebook)

- IP address, where requests from known IP addresses belonging to NASK, KNF, Facebook, or Google are automatically blocked

If these parameters do not match those of a typical internet user and suggest an analyst or a bot, the visitor is redirected to a page that appears to be a regular, harmless portal. For example, if we click an ad link and the page decides that we are a bot, we are redirected to a static page prepared by the fraudsters, supposedly belonging to a business coaching team. However, if we use the expected browser and a more typical IP address, we are redirected to the actual scam website.
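One way an analyst might spot header-based cloaking is to fetch the same ad link with two request profiles, one resembling a user arriving from Facebook and one resembling a scanner, and compare where each ends up and what it sees. The sketch below is a minimal illustration under stated assumptions: the profile header values, the 0.5 similarity threshold, and the names `PROFILES`, `fetch`, `similarity`, `looks_cloaked`, and `check` are all hypothetical, and IP-based cloaking is only detectable if the two fetches are made from different networks.

```python
import difflib
import urllib.request
from urllib.parse import urlparse

# Hypothetical request profiles; header values are illustrative assumptions,
# not the exact values any particular cloaking kit checks for.
PROFILES = {
    # Resembles a mobile user who clicked an ad inside Facebook
    "user": {
        "User-Agent": ("Mozilla/5.0 (Linux; Android 14) AppleWebKit/537.36 "
                       "(KHTML, like Gecko) Chrome/124.0 Mobile Safari/537.36"),
        "Referer": "https://m.facebook.com/",
    },
    # Resembles an automated scanner: tool-like User-Agent, no Referer
    "scanner": {
        "User-Agent": "Python-urllib/3",
    },
}


def fetch(url: str, headers: dict) -> tuple:
    """Fetch url with the given headers and return (final_url, body).

    urllib follows HTTP redirects, but not JavaScript or <meta> refresh redirects.
    """
    request = urllib.request.Request(url, headers=headers)
    with urllib.request.urlopen(request, timeout=10) as response:
        body = response.read().decode("utf-8", errors="replace")
        return response.url, body


def similarity(body_a: str, body_b: str) -> float:
    """Rough textual similarity in [0, 1] between two HTML bodies."""
    return difflib.SequenceMatcher(None, body_a, body_b).ratio()


def looks_cloaked(final_a: str, body_a: str, final_b: str, body_b: str,
                  threshold: float = 0.5) -> bool:
    """Flag a link if the profiles land on different hosts or see very different pages."""
    if urlparse(final_a).hostname != urlparse(final_b).hostname:
        return True
    return similarity(body_a, body_b) < threshold


def check(url: str) -> bool:
    """Fetch url with both profiles and compare the results (performs network I/O)."""
    final_user, body_user = fetch(url, PROFILES["user"])
    final_scan, body_scan = fetch(url, PROFILES["scanner"])
    return looks_cloaked(final_user, body_user, final_scan, body_scan)
```

Dynamic pages (rotating tokens, embedded ads) can lower the similarity score even without cloaking, so a flag from this sketch is a lead for manual review rather than a verdict.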

Cloaking Methods Used in Ad Search Engines (Ad Library)

Ads on platforms are shown to users based on the decisions of the "algorithm", which makes it very difficult to find such content in the so-called feed. Users also have no easy way to link to the posts or sponsored links they are shown. Fortunately, some platforms offer advertisement libraries, such as the Ad Library managed by Meta.

However, these libraries have known flaws that allow completely different content to be displayed in the Ad Library than what is shown to users.

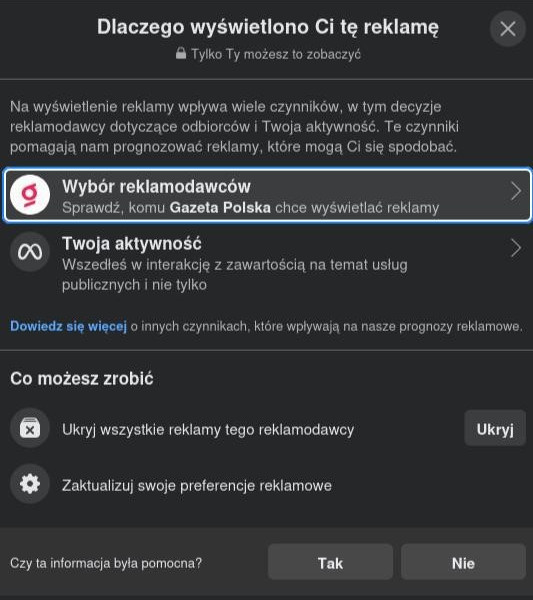

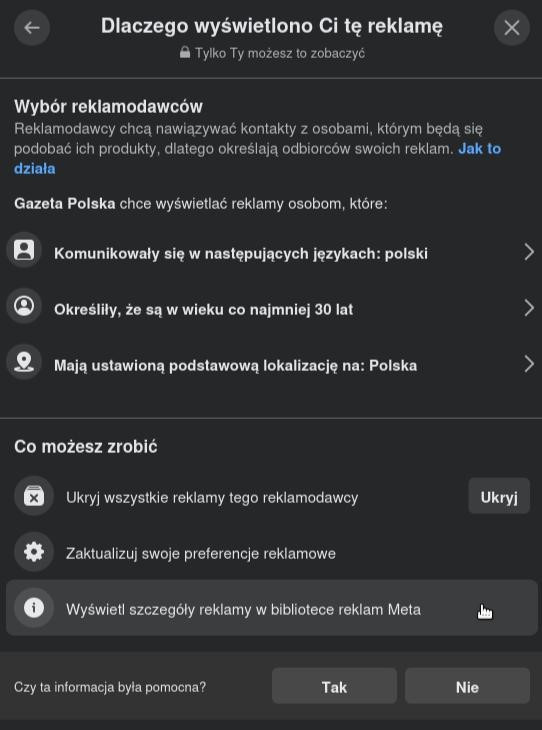

From the ad, you can access the Ad Library by selecting the option "Why am I seeing this ad", then "Advertiser choices", and finally the option "See ad details in the Meta Ad Library."

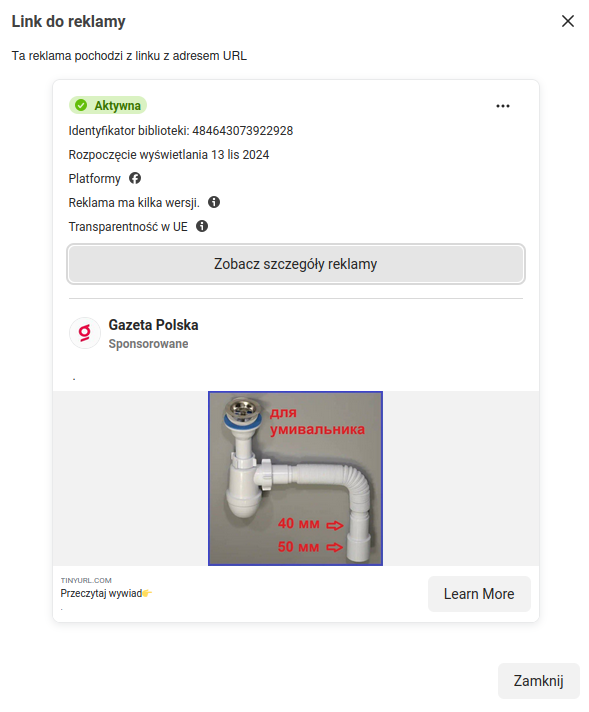

Unfortunately, the content in the Ad Library often differs completely from what is shown in the original post because the ad has several versions. The use of multiple ad versions is intended to bypass verification mechanisms. If all versions of the ad have been correctly indexed in the Ad Library, it is sometimes possible to find the correct content among the versions.

However, this is not always possible, because both ads and their new versions are indexed with a delay of up to 24 hours, while the unindexed versions are already being displayed to users. As a result, the Ad Library often shows only a single version of the ad, with an alternative graphic, for an extended period. Sometimes none of the ad versions are indexed in the Ad Library at all, even though the ad is being displayed to users. In such cases, the Ad Library shows a message stating that the ad is not yet available.

Being able to find an ad only 24 hours after it was added is far too late. According to statistics, over 50% of phishing campaign victims provide their data within the first hour, and the average campaign lasts 21 hours from the first victim to the last. An indexing delay of up to 24 hours can therefore exceed the entire lifetime of an average campaign. Response time is critical, which is why, for example, we recommend that users of the Warning List update it every 5 minutes.

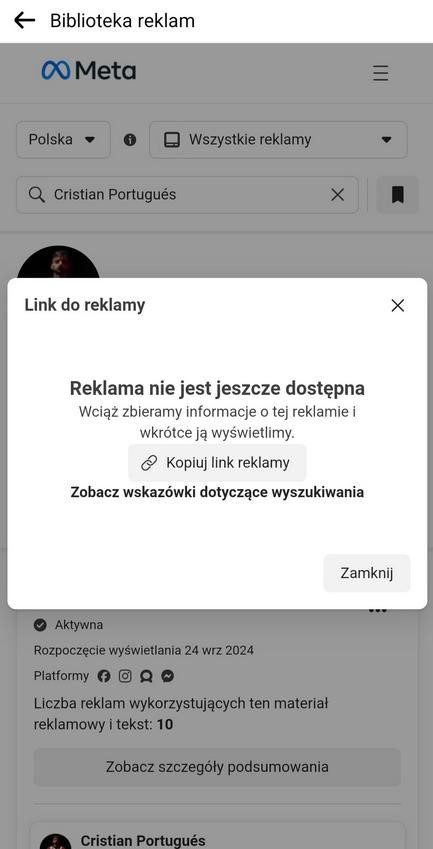

New ads appear every day, often published from the same Facebook pages. In the Ad Library, after unchecking the "Active Ads" filter, one can often see that older ads from a page were found to violate the Ad Standards, yet the page is still able to publish new ones.

Cloaking Methods Involving Content Manipulation in Ads

Fraudsters also actively try to deceive verification algorithms. One technique involves placing the actual content within a larger image and then using features offered by Ads Manager so that target users are shown only the portion containing the ad.

For videos, one technique is to insert a single frame unrelated to the ad's content, which makes it harder to find the correct version of the ad in the Ad Library. Another is to create very long videos (e.g., 30 minutes), where the main content appears at the beginning and the rest consists of a looped static image or an unrelated looped video.

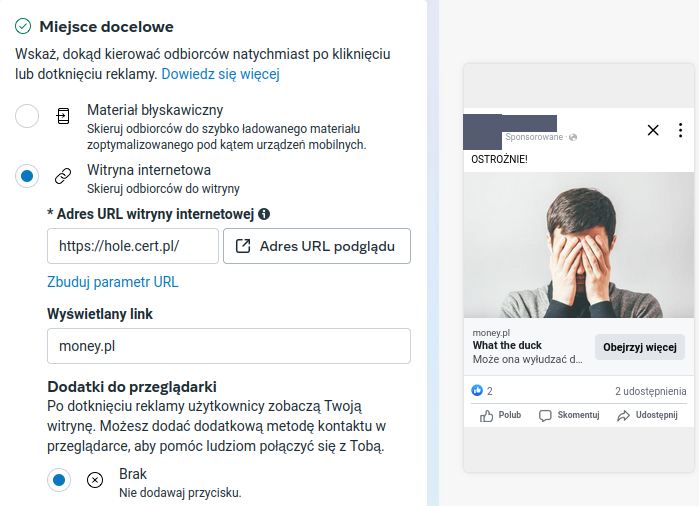

Facebook's advertising mechanisms also allow the ad summary to display a different domain from the one the user is actually sent to. Fraudsters actively exploit this by displaying a credible domain in the ad, such as gazeta.pl.

Although the ad shows gazeta.pl, users are redirected to a link with a different domain. We have observed that this is a feature of Ads Manager: it lets advertisers set a displayed link that differs from the actual destination, without verifying whether the two are related.
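The missing verification described above amounts to checking whether the destination a user finally reaches belongs to the domain shown in the ad. The sketch below shows one simple form of that check, assuming that "final URL" means the address reached after following all tracking-link redirects. The function names are hypothetical, and treating `www.` as equivalent to the bare domain is a simplifying assumption; a production check would compare registrable domains using the Public Suffix List.

```python
from urllib.parse import urlparse


def normalize_host(url_or_domain: str) -> str:
    """Return the lowercase host for inputs like 'gazeta.pl' or 'https://gazeta.pl/path'."""
    if "://" not in url_or_domain:
        url_or_domain = "https://" + url_or_domain
    host = urlparse(url_or_domain).hostname or ""  # hostname is already lowercased
    # Simplifying assumption: "www.example.pl" and "example.pl" are the same site
    return host.rstrip(".").removeprefix("www.")


def display_link_matches(display_link: str, final_url: str) -> bool:
    """True if final_url is on the displayed domain or one of its subdomains."""
    shown = normalize_host(display_link)
    actual = normalize_host(final_url)
    if not shown or not actual:
        return False
    # The "." prefix stops look-alikes such as "evilgazeta.pl" from matching
    return actual == shown or actual.endswith("." + shown)


print(display_link_matches("gazeta.pl", "https://wiadomosci.gazeta.pl/article"))  # True
print(display_link_matches("gazeta.pl", "https://gazeta.pl.example-scam.com/"))   # False
```

Comparing by suffix on a label boundary, rather than by substring, is what rejects destinations like `gazeta.pl.example-scam.com` that merely contain the displayed name.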

Platform Responses to User Reports

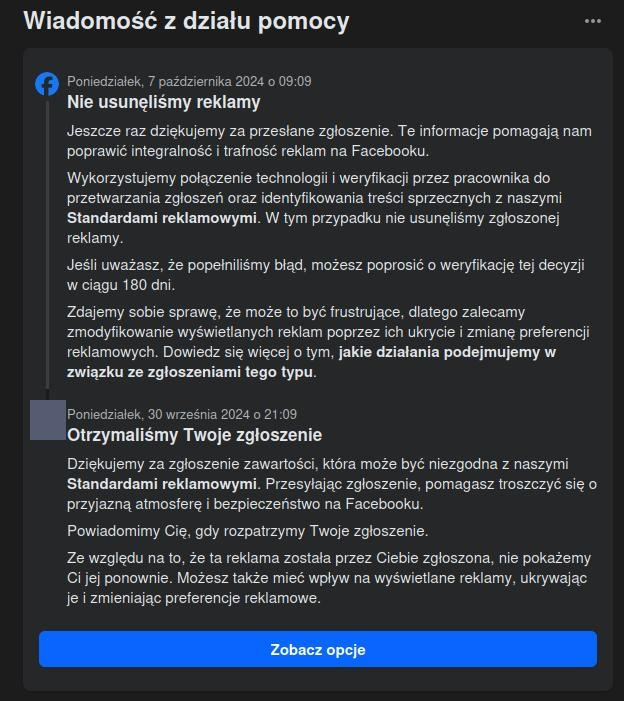

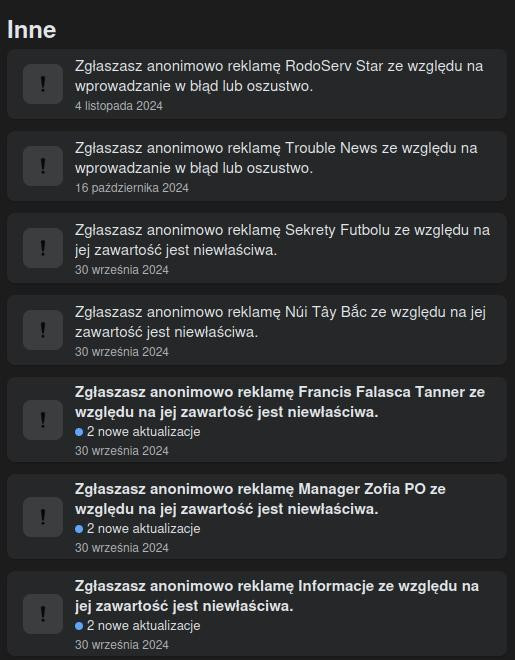

Platforms allow users to report malicious ads. Meta platforms offer a "Report Ad" option for reporting fraudulent content. However, users tell us that their reports to Meta are often rejected and closed after 7 days with the status "We did not remove the ad".

To verify this, from January to November 2024 we reported ads that we considered fraudulent from a standard Facebook user account. Of the 122 malicious ads reported, only 10 were removed. In 106 cases (86.9%), the reports were closed with the status "We did not remove the ad", and in 6 cases we received no response.
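The percentages for each outcome of the reporting test can be double-checked with a few lines of arithmetic, using only the counts given above:

```python
# Outcomes of the 122 ad reports submitted from January to November 2024
outcomes = {"not removed": 106, "removed": 10, "no response": 6}

total = sum(outcomes.values())
# Percentage share of each outcome, rounded to one decimal place
shares = {outcome: round(100 * count / total, 1) for outcome, count in outcomes.items()}

print(total)  # 122
for outcome, share in shares.items():
    print(f"{outcome}: {share}%")
# not removed: 86.9%
# removed: 8.2%
# no response: 4.9%
```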

Similar campaigns observed in different countries

Fraudulent campaigns using large online platforms are not limited to Polish users. Numerous media reports indicate that similar activities have been observed practically all over the world, including in the Baltic countries (Lithuania, Estonia), Denmark, Australia, New Zealand, Japan, and South Korea.

- https://www.theguardian.com/technology/2024/oct/02/more-than-9000-scam-facebook-pages-deleted-after-australians-lose-millions-to-celebrity-deepfakes

- https://www.euronews.com/2024/04/11/danish-celebrities-report-meta-to-police-over-fraudulent-ads

- https://www.lrt.lt/en/news-in-english/19/2143013/lithuania-to-turn-to-ec-as-meta-ignores-calls-to-deal-with-scammers-on-social-media

- https://koreajoongangdaily.joins.com/news/2024-04-01/business/industry/Google-to-crack-down-on-scam-ads-impersonating-celebrities/2015565

- https://www.japantimes.co.jp/news/2024/04/25/japan/crime-legal/meta-sued-in-japan-for-celebrity-scams/

Particularly noteworthy is the analysis by the debunk.org portal, which described various cases of media and celebrity abuse on Meta platforms.

- https://www.debunk.org/the-largest-disinformation-and-scam-attack-ever-recorded-in-lithuania-part-i

- https://www.debunk.org/the-large-scale-scam-attack-also-exploits-well-known-lithuanian-and-foreign-personalities-part-ii

Summary

CERT Polska observes an increasing use of advertising platforms by fraudsters to distribute fraudulent content. This is a challenge because fraudsters have learned to exploit the technical weaknesses of these platforms to bypass built-in moderation mechanisms.

The growing intensity of advertising-related threats over the years indicates that platform owners are not addressing this issue properly. Meta regularly publishes reports stating that it detects over 99% of fake accounts itself, with only a small fraction reported by users. Meta has also established numerous collaborations with institutions fighting cybercrime and disinformation, such as the FIRE initiative, a partnership between Meta and UK banks.

At the same time, we see that user reports are not processed with due diligence, which may explain why they make up such a small share of the figures in the published reports. Transparency mechanisms such as ad libraries have known flaws that criminals widely exploit, so that an ad's content is either missing from the library or differs from what is actually displayed. Similar fraudulent content also appears in sponsored links shown for relevant keywords in Google search.

One solution that could help counter malicious websites in Poland is the Warning List, which telecom operators have used since 2020 to block access to malicious websites. A click on an ad often passes through a "tracking link" before the user is redirected to the final page. If tracking links refused to redirect to domains on the Warning List, and ads pointing to such links were blocked automatically, the effectiveness of fraudsters' campaigns would be significantly reduced.
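As a rough illustration of the proposal above, a tracking-link service could check every hop of a redirect chain against the Warning List before forwarding the user. This is a minimal sketch under assumptions: the function names are hypothetical, loading and refreshing the list (the text recommends updating it every 5 minutes) is left out, and treating subdomains of a listed domain as blocked is an assumption about how the list should be applied, not a documented property of it.

```python
from typing import Optional
from urllib.parse import urlparse


def parent_domains(host: str) -> list:
    """'a.b.example.pl' -> ['a.b.example.pl', 'b.example.pl', 'example.pl', 'pl']."""
    labels = host.rstrip(".").lower().split(".")
    return [".".join(labels[i:]) for i in range(len(labels))]


def is_on_warning_list(url: str, warning_list: set) -> bool:
    """True if the URL's host, or any parent domain of it, is on the list (assumed semantics)."""
    host = urlparse(url).hostname
    if not host:
        return False
    return any(domain in warning_list for domain in parent_domains(host))


def first_blocked_hop(redirect_chain: list, warning_list: set) -> Optional[str]:
    """Return the first URL in the chain that should not be redirected to, or None."""
    for hop in redirect_chain:
        if is_on_warning_list(hop, warning_list):
            return hop
    return None


# Hypothetical example: the list would normally be loaded from the Warning List feed
warning_list = {"fake-invest.example"}
chain = [
    "https://tracker.example/r?id=123",
    "https://promo.fake-invest.example/form",
]
print(first_blocked_hop(chain, warning_list))  # https://promo.fake-invest.example/form
```

Checking each hop, rather than only the first link, matters because cloaking and tracking services can insert several intermediate redirects before the final scam page.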

In cooperation with other CSIRTs, we strive to keep platform owners informed about threats distributed through ads. However, our actions will not be fully effective without proactive involvement from the platforms. At a declarative level, this cooperation seems to be going well. Yet looking at the many deceived Poles and the often tragic stories behind these cases, such as people losing their life savings, we cannot help feeling that our voice, and the voice of citizens who trust the platforms they use, is not being heard loudly enough.

Other examples of fraudulent ads